In parallel computing, achieving faster and more efficient processing is a top priority. Two key paradigms driving this are Single Instruction, Multiple Data (SIMD) and Single Instruction, Multiple Threads (SIMT). While they share similarities in enabling parallelism, their underlying architectures and use cases differ significantly. This article explores what SIMD and SIMT are, how they compare, and which might be better for different scenarios.

What is SIMD?

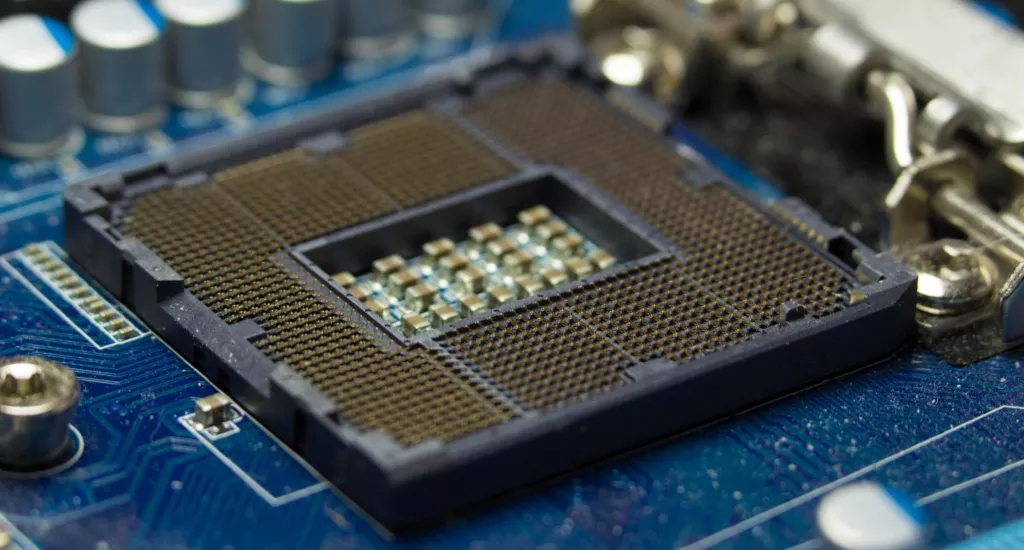

SIMD is a parallel computing model where a single instruction operates on multiple data elements simultaneously. It is commonly implemented in CPUs and vector processors. The data is packed into wide vector registers, and one instruction, issued by a single control unit, applies the same operation to every element (lane) of the register at once.
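To make the idea concrete, here is a minimal C++ sketch of an element-wise array addition. The function name `add_arrays` is hypothetical. The sketch assumes an x86 compiler that defines `__SSE__`, and falls back to a plain scalar loop everywhere else. On the SSE path, a single `_mm_add_ps` adds four floats at a time.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

#if defined(__SSE__)
#include <xmmintrin.h>  // SSE intrinsics: __m128, _mm_add_ps, ...
#endif

// Hypothetical helper: element-wise sum of two equal-length float arrays.
std::vector<float> add_arrays(const std::vector<float>& a,
                              const std::vector<float>& b) {
    assert(a.size() == b.size());
    std::vector<float> out(a.size());
    std::size_t i = 0;
#if defined(__SSE__)
    // One _mm_add_ps instruction adds four float lanes at once.
    for (; i + 4 <= a.size(); i += 4) {
        __m128 va = _mm_loadu_ps(a.data() + i);  // unaligned load of 4 floats
        __m128 vb = _mm_loadu_ps(b.data() + i);
        _mm_storeu_ps(out.data() + i, _mm_add_ps(va, vb));
    }
#endif
    // Scalar tail (and the whole array when SSE is unavailable).
    for (; i < a.size(); ++i) {
        out[i] = a[i] + b[i];
    }
    return out;
}
```

In practice, an optimizing compiler will often auto-vectorize the plain scalar loop as well. The intrinsics only make the "one instruction, several lanes" structure visible.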

Applications:

- Image and video processing

- Signal processing

- Scientific simulations

- Cryptographic computations

Key Features:

- Efficient handling of data-level parallelism

- Low control overhead

- Built into CPU instruction sets (e.g., SSE, AVX)

What is SIMT?

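SIMT, on the other hand, is a parallel execution model used in GPUs, where multiple threads execute the same instruction simultaneously, each on its own data. Threads are scheduled in fixed-size groups called warps (NVIDIA, 32 threads) or wavefronts (AMD, 32 or 64 threads). Each thread has its own registers and can follow its own control flow, but the threads of a warp execute in lockstep, so divergence (different execution paths within a warp) forces those paths to run one after another and hurts performance.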
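The cost of divergence can be illustrated with a toy model in plain C++. This is not GPU code, and `run_warp` is a hypothetical name. It assumes one thread per array element and a single `if`/`else`, and it simply counts how many branch paths the warp has to issue when its threads run in lockstep.

```cpp
#include <cstddef>
#include <vector>

// Toy model (not real GPU code). One warp executes, in lockstep:
//   if (x is even) x = x * 2; else x = x + 1;
// Returns how many branch paths the warp had to issue.
int run_warp(std::vector<int>& data) {
    const std::size_t warp_size = data.size();  // one thread per element
    std::vector<bool> takes_then(warp_size);
    bool any_then = false;
    bool any_else = false;

    // Every thread evaluates the same condition on its own data.
    for (std::size_t tid = 0; tid < warp_size; ++tid) {
        takes_then[tid] = (data[tid] % 2 == 0);
        any_then = any_then || takes_then[tid];
        any_else = any_else || !takes_then[tid];
    }

    int passes = 0;
    if (any_then) {  // "then" pass: threads on the else path sit idle
        for (std::size_t tid = 0; tid < warp_size; ++tid) {
            if (takes_then[tid]) data[tid] *= 2;
        }
        ++passes;
    }
    if (any_else) {  // "else" pass: threads on the then path sit idle
        for (std::size_t tid = 0; tid < warp_size; ++tid) {
            if (!takes_then[tid]) data[tid] += 1;
        }
        ++passes;
    }
    return passes;
}
```

With all-even input such as `{2, 4, 6, 8}` the model issues one pass. With mixed input such as `{1, 2, 3, 4}` it issues two, and in each pass half of the threads do no useful work.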

Applications:

- Graphics rendering

- Machine learning

- High-performance computing (HPC) tasks

- Large-scale data processing

Key Features:

- Threads are grouped into warps for execution

- Per-thread control flow, so branches and irregular data access are easier to express

- Scales to thousands of concurrent threads on GPUs (programmed through CUDA, OpenCL)

Comparing SIMD and SIMT

| Feature | SIMD | SIMT |
|---|---|---|
| Execution Unit | Single control unit driving multiple data lanes | Threads grouped into warps that execute in lockstep |
| Hardware | CPUs, vector processors | GPUs |
| Parallelism Type | Data-level parallelism, expressed as vector operations | Data-level parallelism, expressed as many scalar threads |
| Programming Complexity | Lower | Higher |
| Divergence Handling | No per-lane branching; conditionals use masks or blends | Performance loss in diverging warps |
| Performance | Better for structured data | Better for diverse and scalable tasks |
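
On the SIMD side, lanes cannot branch independently, so a per-element condition is usually turned into a mask that selects which lanes keep their value. The sketch below shows one way to do this in C++. The function `keep_positive` is a hypothetical example, and the SSE path is assumed to be available only when the compiler defines `__SSE__`; otherwise the scalar loop handles everything.

```cpp
#include <cstddef>
#include <vector>

#if defined(__SSE__)
#include <xmmintrin.h>  // SSE intrinsics: _mm_cmpgt_ps, _mm_and_ps, ...
#endif

// Hypothetical helper: out[i] = in[i] if in[i] > 0, otherwise 0.
std::vector<float> keep_positive(const std::vector<float>& in) {
    std::vector<float> out(in.size());
    std::size_t i = 0;
#if defined(__SSE__)
    const __m128 zero = _mm_setzero_ps();
    for (; i + 4 <= in.size(); i += 4) {
        __m128 v = _mm_loadu_ps(in.data() + i);
        // Lanes where v > 0 get all-one bits, the other lanes all-zero bits.
        __m128 mask = _mm_cmpgt_ps(v, zero);
        // Bitwise AND keeps v in the selected lanes and yields 0.0f elsewhere.
        _mm_storeu_ps(out.data() + i, _mm_and_ps(mask, v));
    }
#endif
    // Scalar tail (and the whole array when SSE is unavailable).
    for (; i < in.size(); ++i) {
        out[i] = in[i] > 0.0f ? in[i] : 0.0f;
    }
    return out;
}
```

Every lane does the same work whether or not its value is kept. That is the SIMD counterpart of the idle threads in a diverging warp.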

Which is Better?

The choice between SIMD and SIMT depends on the application and the hardware available:

- SIMD is better for tasks involving regular, structured data like matrix operations or audio processing. It is efficient on CPUs and excels in scenarios where data alignment and uniformity are crucial.

- SIMT is better for tasks requiring high scalability, such as deep learning and 3D rendering. GPUs leverage SIMT for their massive parallelism, making them ideal for tasks that benefit from a large number of concurrent threads.

SIMD and SIMT are both essential tools in the parallel computing toolbox, tailored for different types of workloads. SIMD is a simpler model ideal for tasks with predictable data patterns, while SIMT shines in more complex, large-scale applications. Choosing the right one depends on understanding the workload’s nature and leveraging the strengths of the hardware.